Shader Live Coding

Because I'm often maximalist when it comes to overlapping concepts (especially in digital art), I decided to restrict myself to one simple concept: masking a colored background with solid shapes whose outlines grow and shrink according to the music's intensity. Sticking to that goal was rather fun and forced me to think harder about how to create interesting silhouettes. I especially enjoyed playing around with how different angles interact with each other.

It really shocked me how simple it was to manipulate a grid of squares; The Book of Shaders' emphasis on the step() function made things a ton easier. From there, adapting the Hexler sine wave example with changes in rotation and frequency, plus using 3D Perlin noise to create a pretty, morphing background, fit well into the aesthetic I was going for.
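This is a rough CPU-side sketch of how step() turns a grid into solid shapes. It mirrors what a fragment shader would compute for each pixel, and it is not the original shader. The name `gridSquareMask`, the 0.2 to 0.45 half-width range, and an `audioLevel` already normalized to [0, 1] are all hypothetical choices made for illustration.

```typescript
// GLSL-style step(): 0 when x < edge, 1 otherwise.
function step(edge: number, x: number): number {
  return x < edge ? 0 : 1;
}

// GLSL-style fract(): fractional part, always in [0, 1).
function fract(x: number): number {
  return x - Math.floor(x);
}

// 1 inside the square centered in this pixel's grid cell, 0 outside.
// u, v: normalized pixel coordinates; cells: grid cells per side;
// audioLevel: music intensity, assumed pre-normalized to [0, 1].
function gridSquareMask(u: number, v: number, cells: number, audioLevel: number): number {
  // Position inside the current cell.
  const cu = fract(u * cells);
  const cv = fract(v * cells);
  // Square half-width grows from 0.2 (silence) to 0.45 (max intensity).
  const half = 0.2 + 0.25 * audioLevel;
  const lo = 0.5 - half;
  const hi = 0.5 + half;
  // Each step() is one edge test; multiplying them acts as a logical AND.
  return step(lo, cu) * step(cu, hi) * step(lo, cv) * step(cv, hi);
}
```

In a shader, the mask would then feed something like `mix(background, shapeColor, mask)`. Because every edge test is a step(), no branching is needed, which is a big part of why the approach is so pleasant to work with.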

As with all graphics programming, this took me far longer than I expected (though about in line with your time estimate). I think I clocked in at about 5 hours, with lots of pacing around the room thinking of ways to layer effects.

(Gallery coming soon. I need to go to bed.)

Video • Code

Video Processing

While looking for inspiration for different types of noise to incorporate into video, Voronoi noise stood out to me as extremely capable of replicating natural, real-life effects. I noticed that with some pretty simple modifications, it resembles human skin cells (appropriately, it falls into the category of 'cell noise'). Actually getting the Voronoi texture to morph in a way that felt natural took a long time, since I tried my best not to rely on the code snippets provided in our reading. The exception was the 'random' function, which I couldn't figure out how to write any other way.
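Here is a minimal sketch of animated Voronoi (F1) noise in TypeScript, mirroring the shader logic. The sin-based `random2` hash follows the style widely used in shader tutorials. It is assumed to be similar to, but not necessarily identical to, the 'random' function from the reading. The animation scheme and all names are illustrative.

```typescript
function fract(x: number): number {
  return x - Math.floor(x);
}

// Sin-based 2D hash: maps an integer cell coordinate to a pseudo-random point in [0, 1)^2.
function random2(px: number, py: number): [number, number] {
  return [
    fract(Math.sin(px * 127.1 + py * 311.7) * 43758.5453),
    fract(Math.sin(px * 269.5 + py * 183.3) * 43758.5453),
  ];
}

// F1 Voronoi: distance from (x, y) to the nearest animated feature point.
function voronoi(x: number, y: number, time: number): number {
  const ix = Math.floor(x);
  const iy = Math.floor(y);
  const fx = x - ix;
  const fy = y - iy;
  let minDist = Infinity;
  // Search this cell and its 8 neighbors, the usual shortcut for F1 Voronoi.
  for (let oy = -1; oy <= 1; oy++) {
    for (let ox = -1; ox <= 1; ox++) {
      const [rx, ry] = random2(ix + ox, iy + oy);
      // Each point orbits inside its cell with its own phase, so the pattern morphs smoothly.
      const px = 0.5 + 0.5 * Math.sin(time + 2 * Math.PI * rx);
      const py = 0.5 + 0.5 * Math.sin(time + 2 * Math.PI * ry);
      minDist = Math.min(minDist, Math.hypot(ox + px - fx, oy + py - fy));
    }
  }
  return minDist;
}
```

Sampling `voronoi(u * scale, v * scale, time)` for every pixel gives the familiar cell pattern. Feeding the distance into a color ramp is what makes it read as cells.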

By analyzing the brightness value of each pixel, I created a threshold that switches between the regular camera output and the cell output. From there, all that was left was to tint the Voronoi color palette to resemble skin and add some ways for users to interact with the effect and mess around with the parameters. Writing silly instructions with pseudoscientific results was the cherry on top!
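This sketch shows one way the brightness threshold and the skin palette could fit together per pixel. Several details are assumptions, not the project's actual values:

- the Rec. 709 luminance weights;
- the direction of the switch (dark camera pixels become cells);
- the two skin tones.

```typescript
type RGB = [number, number, number]; // channels in [0, 1]

// Relative luminance with Rec. 709 weights (assumed; Rec. 601 weights would also work).
function luminance(c: RGB): number {
  return 0.2126 * c[0] + 0.7152 * c[1] + 0.0722 * c[2];
}

// GLSL-style mix(): linear interpolation between two colors.
function mix(a: RGB, b: RGB, t: number): RGB {
  return [a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t, a[2] + (b[2] - a[2]) * t];
}

// Hypothetical skin tones: cell centers lighter, cell borders darker.
const SKIN_LIGHT: RGB = [0.96, 0.8, 0.69];
const SKIN_DARK: RGB = [0.72, 0.47, 0.38];

// cellDist: Voronoi F1 distance for this pixel, roughly in [0, 1].
function shadePixel(camera: RGB, cellDist: number, threshold: number): RGB {
  // Assumed direction: pixels darker than the threshold switch to the cell texture.
  if (luminance(camera) >= threshold) return camera;
  return mix(SKIN_LIGHT, SKIN_DARK, Math.min(1, cellDist));
}
```

Exposing `threshold` as a user control is one easy way to let people play with how much of the image "turns into skin".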

I set my scope pretty early on, so I was able to finish this in around the same time it took me to do the live coding assignment. Clocked in at 5.5 hours.

Project • Code 1 • Code 2

Reaction Diffusion

This one was a toughie. I hit probably four major roadblocks where I was slamming my head against the wall for an hour or so: one involved the workgroup size, one the ping-pong feature, one some mysterious behavior with array indices not displaying correctly, and the last was difficulty importing uniforms. The end result did end up looking lovely. I kept it simple by populating the field with chemical B wherever the user clicked and letting them change the basic parameters of the reaction. I used my tried-and-true method of slapping a cute color gradient over a white background. I think I'm accidentally building my visual brand!
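This sketch assumes the reaction is the Gray-Scott model, the usual A/B formulation (as in Karl Sims' reaction-diffusion tutorial). It shows one update step on the CPU plus the ping-pong buffer swap. The default parameters are common Gray-Scott values, not the project's own. The names `simulate`, `seedB`, and `run` are hypothetical. On the GPU, each compute-shader invocation would handle one cell of `simulate`.

```typescript
interface RDParams { dA: number; dB: number; feed: number; kill: number; dt: number; }
const DEFAULTS: RDParams = { dA: 1.0, dB: 0.5, feed: 0.055, kill: 0.062, dt: 1.0 };

// Each cell stores [A, B] interleaved in a Float32Array of length w * h * 2.
function makeGrid(w: number, h: number): Float32Array {
  const g = new Float32Array(w * h * 2);
  for (let i = 0; i < w * h; i++) g[2 * i] = 1; // all A, no B
  return g;
}

// Wrap coordinates so the grid behaves like a torus.
function cell(x: number, y: number, w: number, h: number): number {
  return ((y + h) % h) * w + ((x + w) % w);
}

// 3x3 Laplacian: center -1, edge neighbors 0.2, diagonal neighbors 0.05.
function laplacian(g: Float32Array, x: number, y: number, w: number, h: number, ch: number): number {
  let sum = -g[2 * cell(x, y, w, h) + ch];
  for (let dy = -1; dy <= 1; dy++) {
    for (let dx = -1; dx <= 1; dx++) {
      if (dx === 0 && dy === 0) continue;
      const weight = dx === 0 || dy === 0 ? 0.2 : 0.05;
      sum += weight * g[2 * cell(x + dx, y + dy, w, h) + ch];
    }
  }
  return sum;
}

// One Gray-Scott update: read only from `src`, write only to `dst`.
function simulate(src: Float32Array, dst: Float32Array, w: number, h: number, p: RDParams): void {
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      const i = 2 * cell(x, y, w, h);
      const a = src[i];
      const b = src[i + 1];
      const reaction = a * b * b;
      dst[i] = a + (p.dA * laplacian(src, x, y, w, h, 0) - reaction + p.feed * (1 - a)) * p.dt;
      dst[i + 1] = b + (p.dB * laplacian(src, x, y, w, h, 1) + reaction - (p.kill + p.feed) * b) * p.dt;
    }
  }
}

// Mimic a click: set B = 1 in a square around (cx, cy).
function seedB(g: Float32Array, w: number, h: number, cx: number, cy: number, r: number): void {
  for (let dy = -r; dy <= r; dy++) {
    for (let dx = -r; dx <= r; dx++) g[2 * cell(cx + dx, cy + dy, w, h) + 1] = 1;
  }
}

// Ping-pong loop: swap the roles of the two buffers after every step.
function run(w: number, h: number, steps: number, seed: boolean): Float32Array {
  let read = makeGrid(w, h);
  let write = makeGrid(w, h);
  if (seed) seedB(read, w, h, w >> 1, h >> 1, 2);
  for (let s = 0; s < steps; s++) {
    simulate(read, write, w, h, DEFAULTS);
    [read, write] = [write, read];
  }
  return read; // the most recently written buffer
}
```

The key to ping-ponging is that a step never reads from the buffer it is writing to. Otherwise, cells updated early in a pass would leak into their neighbors' updates within the same frame.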

This one took a while! Over the course of 3 or 4 days, I probably put in about 6 or 7 hours.

Project (code can be read via inspect -> sources)

Particles

I drew inspiration from screensavers for this one. I love watching little dots or shapes move around in organic ways when I've been toiling away at a math problem for an hour. The simple behavior of fish seemed fitting to implement using a compute shader: I represented each fish with a position, velocity, and rotation, converting from polar to Cartesian coordinates in order to render them. The angle of each fish relative to the others, along with their distance, decides whether the fish turns towards or away. The values are fairly sensitive, but they create some cool swarming patterns! There are a few parameters I decided not to make controls for, since the attract/avoid radii and weights can break the simulation shockingly easily. Implementing fish size, particle count, and speed controls was easy enough, though!
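This is a rough TypeScript sketch of the turning rule. Each fish stores a speed and a heading (polar form), which are converted to a Cartesian step. The radii, the turn weight, and the exact avoid/attract rule are hypothetical. Like a compute shader, `stepSchool` computes every new fish from the previous frame's array.

```typescript
// Each fish stores its motion in polar form: a speed and a heading (radians).
interface Fish { x: number; y: number; speed: number; heading: number; }

// Hypothetical tuning values; the write-up notes these are easy to break.
const AVOID_RADIUS = 0.05;
const ATTRACT_RADIUS = 0.2;
const TURN_WEIGHT = 0.05;

// Signed shortest rotation from `current` to `target`, in (-PI, PI].
function angleDiff(target: number, current: number): number {
  let d = (target - current) % (2 * Math.PI);
  if (d > Math.PI) d -= 2 * Math.PI;
  if (d <= -Math.PI) d += 2 * Math.PI;
  return d;
}

// New state for fish i, reading only the previous frame (like one compute invocation).
function updateFish(prev: Fish[], i: number): Fish {
  const f = prev[i];
  let turn = 0;
  for (let j = 0; j < prev.length; j++) {
    if (j === i) continue;
    const dx = prev[j].x - f.x;
    const dy = prev[j].y - f.y;
    const dist = Math.hypot(dx, dy);
    // How far this fish would need to rotate to face its neighbor.
    const toward = angleDiff(Math.atan2(dy, dx), f.heading);
    if (dist < AVOID_RADIUS) turn -= toward * TURN_WEIGHT; // too close: turn away
    else if (dist < ATTRACT_RADIUS) turn += toward * TURN_WEIGHT; // nearby: turn toward
  }
  const heading = f.heading + turn;
  // Polar (speed, heading) -> Cartesian displacement.
  return {
    x: f.x + f.speed * Math.cos(heading),
    y: f.y + f.speed * Math.sin(heading),
    speed: f.speed,
    heading,
  };
}

// Advance the whole school one frame into a fresh array.
function stepSchool(prev: Fish[]): Fish[] {
  return prev.map((_, i) => updateFish(prev, i));
}
```

Because every turn is scaled by `TURN_WEIGHT` and summed over all neighbors, crowded areas can add up to large rotations quickly. That fits the observation above that the radii and weights break the simulation so easily.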

Pretty sure this one was the quickest of the assignments so far. Probably 4 or 5 hours?

Project (code can be read via inspect -> sources)

Vants

For the custom behavior, I implemented an exploding vant that proceeds straight until it hits a pheromone, gets scared, and blasts away all of the pheromones within a specific radius before turning left. It was tricky, since I had to change the coordinate system pretty significantly for the effect to work properly and to iron out some bizarre behavior that arose when an explosive vant walked off the screen and wrapped around.
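Here is one way to sketch an exploding vant's step in TypeScript. Every coordinate is wrapped onto a torus, so a blast near an edge also clears pheromones on the opposite side. The direction encoding, the circular blast shape, and moving one cell after turning are assumptions made for illustration.

```typescript
interface Vant { x: number; y: number; dir: number; } // dir: 0 up, 1 right, 2 down, 3 left

const DX = [0, 1, 0, -1];
const DY = [-1, 0, 1, 0]; // screen space: y grows downward

// Wrap a coordinate onto [0, size), including negative values.
const wrap = (v: number, size: number): number => ((v % size) + size) % size;

function stepExplodingVant(v: Vant, pher: Uint8Array, w: number, h: number, radius: number): Vant {
  const here = wrap(v.y, h) * w + wrap(v.x, w);
  if (pher[here] > 0) {
    // "Scared": clear every pheromone within `radius`, wrapping across screen edges.
    for (let dy = -radius; dy <= radius; dy++) {
      for (let dx = -radius; dx <= radius; dx++) {
        if (dx * dx + dy * dy > radius * radius) continue; // keep the blast circular
        pher[wrap(v.y + dy, h) * w + wrap(v.x + dx, w)] = 0;
      }
    }
    const dir = (v.dir + 3) % 4; // turn left
    return { x: wrap(v.x + DX[dir], w), y: wrap(v.y + DY[dir], h), dir };
  }
  // No pheromone: keep moving straight ahead.
  return { x: wrap(v.x + DX[v.dir], w), y: wrap(v.y + DY[v.dir], h), dir: v.dir };
}
```

Wrapping the blast offsets, and not just the vant's position, matters here. If you wrap only the position, an explosion next to an edge writes outside the grid or skips the cells that visually neighbor it on the other side.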

This one took about 3 hours!

Project (code can be read via inspect -> sources)

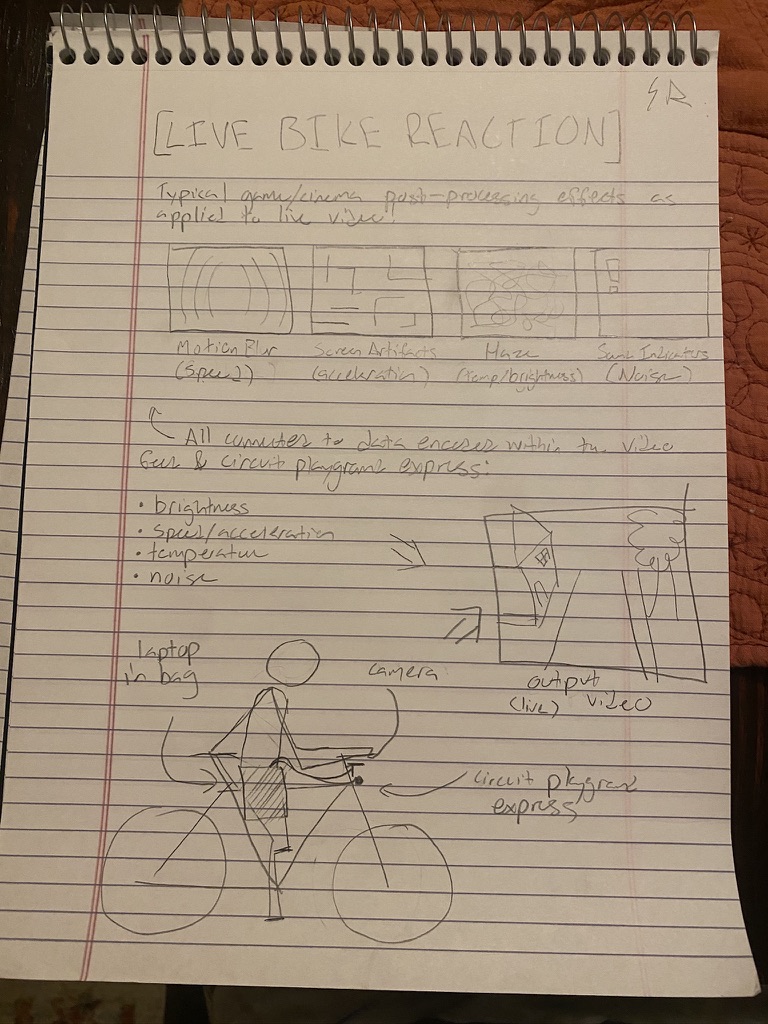

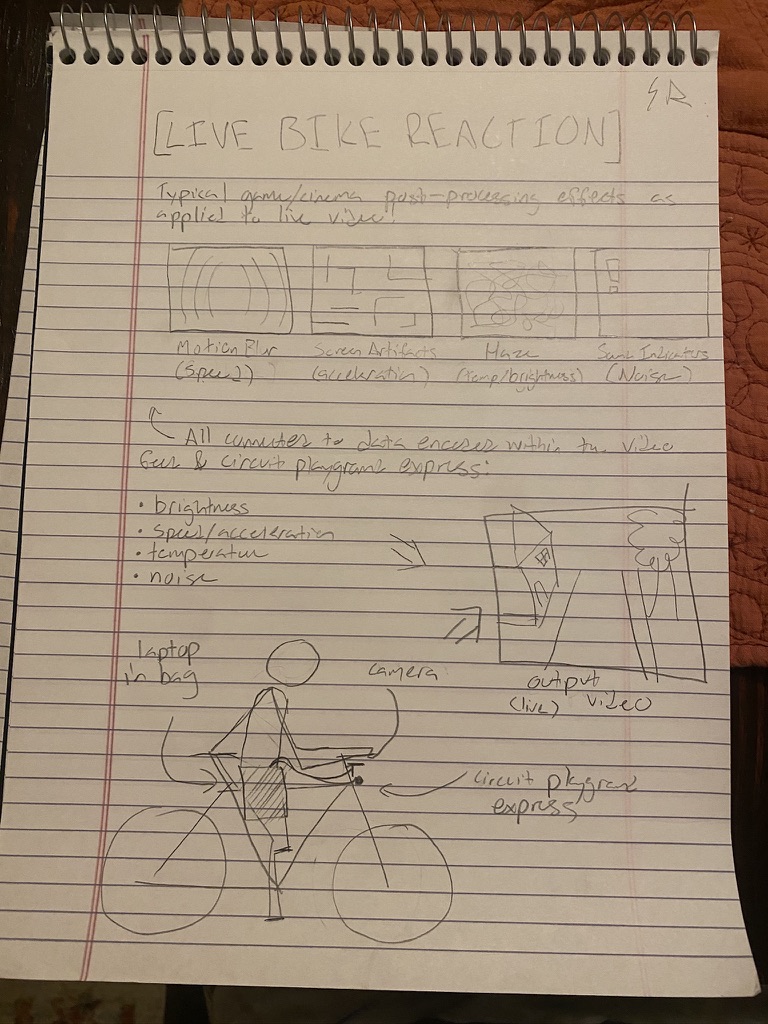

[LIVE BIKE REACTION]

My final project! I've always been inspired by post-processing effects in games, especially those that not only increase immersion but also encode information about the game state and communicate it seamlessly to the player. When the art style and mechanics allow it, that's a much more fun way to build an immersive user interface. To make a boring bike ride through campus or Elm Park more interesting, I decided to create a set of post-processing effects linked to sensor data and apply them to live video!

To gather sensor data, I used a Circuit Playground Express, a tiny board with many embedded sensors, including an accelerometer, microphone, temperature sensor, and photosensor. A webcam provides the live video. The board sends its readings over USB serial to a webpage, which receives them through the JavaScript Web Serial API and aggregates them into an internal state. Finally, Seagulls pulls from this state and passes it into a fragment shader, which applies the post-processing effects to the video.
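Serial reads don't arrive neatly one reading at a time: a chunk can contain half a line or several lines. A small line-buffering parser handles that. In the browser, the chunks could come from reading the Web Serial port's `readable` stream piped through a `TextDecoderStream`. The six-value CSV format and its field order are hypothetical, not the board's actual output.

```typescript
// Hypothetical wire format: the board prints one CSV line per reading,
// "ax,ay,az,light,temp,sound", terminated by a newline.
interface SensorState {
  ax: number; ay: number; az: number;
  light: number; temp: number; sound: number;
}

class SerialLineParser {
  private buffer = "";

  constructor(private readonly state: SensorState) {}

  // Feed one decoded text chunk; chunks may split or merge lines arbitrarily.
  push(chunk: string): void {
    this.buffer += chunk;
    let newline = this.buffer.indexOf("\n");
    while (newline >= 0) {
      const line = this.buffer.slice(0, newline).trim(); // trim also drops a trailing "\r"
      this.buffer = this.buffer.slice(newline + 1);
      this.applyLine(line);
      newline = this.buffer.indexOf("\n");
    }
  }

  // Update the shared state in place; malformed lines are ignored.
  private applyLine(line: string): void {
    const values = line.split(",").map(Number);
    if (values.length !== 6 || values.some((v) => Number.isNaN(v))) return;
    const [ax, ay, az, light, temp, sound] = values;
    Object.assign(this.state, { ax, ay, az, light, temp, sound });
  }
}
```

Whatever sets the shader's uniforms each frame can then just read the shared state object. That keeps the serial timing completely decoupled from the render loop.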

The first effect I included was a "wild-west" heat distortion effect I created for Interactive Electronic Arts, which I thought would be great to include in this project. It's just a combination of a few simple color and video manipulation techniques: Perlin noise to replicate a fiery haze, horizontal "scan lines" resembling digital distortion, sinusoidal video warping to resemble heat waves, and a bump in contrast to emphasize the rest of the visual effects.
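The warp, scan-line, and contrast pieces can be sketched as plain per-pixel functions, written in TypeScript to mirror the shader math. Perlin noise is left out for brevity. The constants, the `Sampler` stand-in for a video texture lookup, and all function names are illustrative guesses, not the original effect's values.

```typescript
type Sampler = (u: number, v: number) => number; // stand-in for a video texture lookup

// Horizontal sinusoidal offset that varies with height and time, like rising heat.
function heatWarp(u: number, v: number, time: number, amplitude = 0.004, frequency = 40): [number, number] {
  return [u + amplitude * Math.sin(v * frequency + time * 3), v];
}

// Alternating dark bands; v is assumed to be in [0, 1].
function scanline(v: number, lineCount = 120, strength = 0.15): number {
  return 1 - strength * (Math.floor(v * lineCount) % 2);
}

// Contrast bump around mid-gray, clamped to [0, 1].
function contrast(c: number, amount = 1.3): number {
  return Math.min(1, Math.max(0, (c - 0.5) * amount + 0.5));
}

// Full chain for one channel: warp the lookup, darken scan lines, then boost contrast.
function heatDistort(video: Sampler, u: number, v: number, time: number): number {
  const [wu, wv] = heatWarp(u, v, time);
  return contrast(video(wu, wv) * scanline(v));
}
```

Warping the texture coordinates before sampling is what creates the wavy look. The scan lines and contrast then operate on the already-displaced color.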

The "warp speed" effect was easy to implement graphically (it's just simple video feedback), but differentiating between consistent acceleration and sharp bumps using a pretty poor accelerometer was tricky. I ended up adding to a "velocity" value on every frame where the acceleration exceeded a specific threshold and letting that value decay naturally.
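Here is a minimal sketch of that accumulate-and-decay approach. Two details are assumptions rather than the project's settings: subtracting gravity (about 9.81 m/s²) from the acceleration magnitude, and the threshold, gain, and decay values.

```typescript
const GRAVITY = 9.81; // m/s^2

// Magnitude of acceleration with gravity removed (assumes readings in m/s^2).
function linearAccel(ax: number, ay: number, az: number): number {
  return Math.abs(Math.hypot(ax, ay, az) - GRAVITY);
}

class WarpSpeed {
  velocity = 0;

  constructor(
    private readonly threshold = 2.0, // m/s^2 above which a frame counts as "accelerating"
    private readonly gain = 1.0,      // how much each qualifying frame adds
    private readonly decay = 0.9,     // per-frame multiplier below 1, so the value fades
  ) {}

  // Call once per frame; returns the smoothed "velocity" to pass to the shader.
  update(accel: number): number {
    if (accel > this.threshold) this.velocity += this.gain;
    this.velocity *= this.decay;
    return this.velocity;
  }
}
```

Because the value decays geometrically, a single bump adds only a little before fading. Acceleration sustained over many frames keeps building it up toward `gain * decay / (1 - decay)`, which is what separates real speed from potholes.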

Volume was similar: much easier to implement on the shader side. I took a short audio sample to use as a baseline for the rest of my audio readings, converting to decibels on a log scale and choosing a threshold by trial and error. In the shader, it's just a soft blue gradient on the far edges of the screen.
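Here is the baseline-and-decibels idea sketched in TypeScript. The level in dB relative to a quiet baseline is 20 * log10(current RMS / baseline RMS). The edge gradient assumes the "far edges" are the left and right sides of the screen. The threshold, widths, and names are all hypothetical.

```typescript
// Root-mean-square amplitude of one block of audio samples.
function rms(samples: number[]): number {
  if (samples.length === 0) return 0;
  let sum = 0;
  for (const s of samples) sum += s * s;
  return Math.sqrt(sum / samples.length);
}

// Loudness in dB relative to a quiet baseline: 20 * log10(current / baseline).
function relativeDecibels(samples: number[], baselineRms: number): number {
  // Clamp to avoid log10(0) = -Infinity on a silent block.
  return 20 * Math.log10(Math.max(rms(samples), 1e-12) / baselineRms);
}

const LOUD_DB = 10; // hypothetical threshold; in practice tuned by trial and error

function isLoud(samples: number[], baselineRms: number): boolean {
  return relativeDecibels(samples, baselineRms) > LOUD_DB;
}

// Shader-side idea: glow strength of 1 at the left/right edges, fading to 0
// once the pixel is `width` (normalized units) away from both.
function edgeGradient(u: number, width: number): number {
  const d = Math.min(u, 1 - u); // distance to the nearest side edge
  return Math.max(0, 1 - d / width);
}
```

The baseline RMS would come from running `rms` on the short quiet recording. The gradient value could then `mix` the video toward a soft blue.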

Pixel sorting was the trickiest effect to implement. I originally tried to do it with a compute shader, but after a lot of experimentation within Seagulls, I realized that it does a ton of black magic behind the scenes that prevents feedback, video input, and compute shaders from working simultaneously. I ended up finding a really cool article detailing an extremely flexible and simple method that works within a fragment shader. Getting it functioning was a ton of fun!
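The article's exact method isn't reproduced here. One well-known way to sort pixels inside a fragment shader with feedback is odd-even transposition sort. Each frame, every pixel compares itself with one horizontal neighbor, with the pairings alternating by frame parity, and keeps either the smaller or the larger value. The TypeScript sketch below simulates one row of brightness values. The threshold mask and all names are assumptions.

```typescript
// What each "fragment" at index x outputs this frame, reading only the previous frame.
function sortPass(prev: number[], frame: number, threshold: number): number[] {
  return prev.map((value, x) => {
    // Pairs are (0,1), (2,3), ... on even frames and (1,2), (3,4), ... on odd frames.
    const isLeft = (x + frame) % 2 === 0;
    const partner = isLeft ? x + 1 : x - 1;
    if (partner < 0 || partner >= prev.length) return value; // unpaired edge pixel
    const other = prev[partner];
    // Only sort pixels at or above the threshold; both members of a pair see the same test.
    if (value < threshold || other < threshold) return value;
    // Left member keeps the smaller value, right member the larger (ascending order).
    return isLeft ? Math.min(value, other) : Math.max(value, other);
  });
}
```

Each pixel makes only a local decision, so this fits a fragment shader that reads last frame's output. A row of n pixels is fully sorted after at most n frames, which shows up on screen as the streaks gradually sliding into place.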

The effect works by detecting an acceleration value above a certain threshold and starting a 50-frame timer during which the effect runs. Bumping the board again within that window resets the timer.
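Here is the retriggerable timer sketched in TypeScript. Only the 50-frame duration comes from the write-up; the bump threshold is a hypothetical value.

```typescript
const SORT_FRAMES = 50; // effect duration from the write-up

class SortTimer {
  private framesLeft = 0;

  constructor(private readonly threshold = 3.0) {} // hypothetical bump threshold

  // Call once per frame; returns true while pixel sorting should run.
  update(accel: number): boolean {
    if (accel > this.threshold) {
      this.framesLeft = SORT_FRAMES; // start, or restart if already running
    } else if (this.framesLeft > 0) {
      this.framesLeft--;
    }
    return this.framesLeft > 0;
  }
}
```

The boolean (or `framesLeft / SORT_FRAMES` for a fade) is what would be passed to the shader as a uniform.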

Absolutely no clue how long this one took. Probably 15-20 hours?

Code • Video
